Last year I had a lot of free time to try out some of the things that had been lingering on my ever-growing to-do list over the years. Top among them was Huginn, which describes itself as:

“Huginn is a system for building agents that perform automated tasks for you online. They can read the web, watch for events, and take actions on your behalf. Huginn’s Agents create and consume events, propagating them along a directed graph. Think of it as a hackable version of IFTTT or Zapier on your own server. You always know who has your data. You do.”

This value proposition, owning your own data and being able to configure the tasks in any way you see fit, has sounded appealing to me ever since I first heard about the project back in ’13 (my to-do lists are indeed very long).

Initial setup

Having maintained my fair share of hobby VPSs in the past, I knew that I didn’t want to set this new system up the old way. So I decided to use the opportunity to get some hands-on experience with Docker (something which had also lingered on my to-do list for years) to keep the whole thing more modular and reproducible.

It just so happens that I was able to find rather good instructions from ByteMark, a hosting company based out of Britain, and so decided to base my setup on theirs.

There have been some changes in how some of the tools used there function since this tutorial was written, so it did take a weekend for me to get everything configured correctly. But in the end I had my own personal dockerized Huginn instance up and running, along with traffic analysis and certificates.

Basic Agents

Now that I had the whole thing set up, it was time to start looking into what kind of automation I was actually going to use it for.

As I use public transport each morning (when the world isn’t in the grips of a global viral pandemic) I thought a good task would be to grab the local weather forecast and have it be delivered to me in a digest email in the mornings before I left for work.

It turned out to be extremely easy to set up a scraping agent, and by using some XPath expressions I was easily able to retrieve the values and have them delivered to me in an HTML digest email each morning. This simple task was a good starting point, as it taught me more about how Huginn functions: how every agent deals with inputs and outputs, and how all of the data being thrown around is simply streams flowing between the agents.
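As a rough illustration of the kind of XPath extraction described above, here is a minimal Python sketch. The markup, the class names and the `extract_forecast` helper are all hypothetical (the real forecast page and the agent configuration are not shown in this post), and it uses the limited XPath subset in Python's standard library rather than Huginn itself.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed forecast markup standing in for the real page.
SAMPLE_MARKUP = """
<div id="forecast">
  <span class="temperature">4</span>
  <span class="wind">12</span>
  <span class="summary">Light rain</span>
</div>
"""

# One XPath expression per value we want, keyed by the output field name.
XPATHS = {
    "temperature": ".//span[@class='temperature']",
    "wind": ".//span[@class='wind']",
    "summary": ".//span[@class='summary']",
}


def extract_forecast(markup):
    """Return a dict with the text of the first match for each XPath."""
    root = ET.fromstring(markup.strip())
    return {name: root.find(path).text.strip() for name, path in XPATHS.items()}


forecast = extract_forecast(SAMPLE_MARKUP)
print(forecast)
```

Note that `xml.etree.ElementTree` only accepts well-formed XML, so real-world HTML would likely need a more forgiving parser before the same XPath expressions could be applied.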

Before I started this whole project, I had been wondering if I would be able to set up automatic scraping for my investment portfolio, which included some funds, stocks and bonds. Seeing how easy it had been to scrape the weather forecast, I thought it wouldn’t take much more effort to scrape a few public-facing numbers and format them.

More advanced Agents

For my first attempt, I was just going to scrape the data from the public-facing webpages for the specific funds I had in mind. But I soon found out that the values displayed there were aggregated in such a manner that I wouldn’t be able to get the fidelity I wanted that way.

So I decided to simply try to get the same raw data that the page uses and, lo and behold, the network calls were rather simple to reproduce and gave me the ability to tailor my range as I wished. The tokens used there have only changed twice in the last 6 months, so they can easily be grabbed manually and stored in Huginn’s own credential storage.

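The data received from these endpoints is simple: only the index values at dates within the specified range. Each entry is a pair of a timestamp (in milliseconds since the Unix epoch) and the index value on that date.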

{"data":[[1.6136064e12,1993.39],[1.6136928e12,1993.43],[1.613952e12,1992.49],[1.6140384e12,1992.53],[1.6141248e12,1993.86],[1.6142112e12,1993.07],[1.6142976e12,1992.33],[1.6145568e12,1991.73],[1.6146432e12,1991.57],[1.6147296e12,1995.31],[1.614816e12,1995.9],[1.6149024e12,1994.76],[1.6151616e12,1994.58],[1.615248e12,1994.98],[1.6153344e12,1995.63],[1.6154208e12,1997.73],[1.6155072e12,1996.14],[1.6157664e12,1996.84],[1.6158528e12,1997.26],[1.6159392e12,1997.07],[1.6160256e12,1996.54]]}
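To make the payload easier to read, here is a small Python sketch that decodes a few of its entries. It assumes the first number of each pair is a Unix timestamp in milliseconds (which is consistent with the division by 1000 in the jq expression used later); the `to_date` helper is just an illustrative name.

```python
from datetime import datetime, timezone

# The first two and the last entries of the payload above.
points = [
    [1.6136064e12, 1993.39],
    [1.6136928e12, 1993.43],
    [1.6160256e12, 1996.54],
]


def to_date(timestamp_ms):
    """Convert a millisecond Unix timestamp to a YYYY-MM-DD string in UTC."""
    seconds = timestamp_ms / 1000
    return datetime.fromtimestamp(seconds, tz=timezone.utc).strftime("%Y-%m-%d")


readable = [(to_date(timestamp), value) for timestamp, value in points]
for date, value in readable:
    print(date, value)
```

Decoded this way, the sample covers 2021-02-18 through 2021-03-18, one value per date.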

The data needed to be processed into something more understandable before being sent in the digest emails. But how does Huginn allow data processing within agents?

There are a few options available. You can execute bash scripts, piping data in and receiving some back, if you like. In fact, the task which fetches the payload above does so through a bash script, because a single agent cannot do a sequence of things in one go (you can chain multiple agents together, pipe the data through each and collect the results, but one agent can only have one output at a time).

There is always the option of writing your own agent in Ruby, but who has time for that?

But the most standard way is to simply convert the data to JSON and use jq.

JQ

jq, which describes itself as “like sed for JSON data”, is a JSON stream manipulator. I would highly recommend trying it out on jq-play while reading through its fantastic manual. It is a really powerful tool for anyone to have in the toolbox.

It allows for some fairly complex processing of large sets of JSON data using very compact expressions.

For instance, the payload above can be formatted using the following expression

{date: .data[-1][0] | (. / 1000 | strftime("%Y-%m-%d")), current_index: .data[-1][1], day_change: (.data | map(.[1]) | (.[-1] - .[-2]) / .[-1]), week_change: (.data | map(.[1]) | (.[-1] -.[-7] ) / .[-1]), month_change: (.data | map(.[1]) | (.[-1] -.[0] ) / .[-1])}

which will then output the following

{
  "current_index": 1311.94,
  "date": "2021-03-18",
  "day_change": 0,
  "month_change": 0.00044971568821755987,
  "week_change": 9.908989740392787e-05
}
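To spell out the arithmetic in that expression, here is a rough Python equivalent, run against the sample payload shown earlier. It is only a sketch for illustration, not part of the Huginn setup, and `summarise` is a made-up name. Note that, like the jq expression, it divides each difference by the latest value, treats the seventh-from-last data point as "a week ago", and treats the first data point in the range as "a month ago".

```python
import json
from datetime import datetime, timezone

# The sample payload from earlier in the post.
PAYLOAD = json.loads("""
{"data":[[1.6136064e12,1993.39],[1.6136928e12,1993.43],[1.613952e12,1992.49],
[1.6140384e12,1992.53],[1.6141248e12,1993.86],[1.6142112e12,1993.07],
[1.6142976e12,1992.33],[1.6145568e12,1991.73],[1.6146432e12,1991.57],
[1.6147296e12,1995.31],[1.614816e12,1995.9],[1.6149024e12,1994.76],
[1.6151616e12,1994.58],[1.615248e12,1994.98],[1.6153344e12,1995.63],
[1.6154208e12,1997.73],[1.6155072e12,1996.14],[1.6157664e12,1996.84],
[1.6158528e12,1997.26],[1.6159392e12,1997.07],[1.6160256e12,1996.54]]}
""")


def summarise(payload):
    """Mirror the jq expression: latest date and value plus relative changes."""
    points = payload["data"]
    values = [value for _, value in points]  # jq: .data | map(.[1])
    latest = values[-1]
    last_timestamp_ms = points[-1][0]
    date = datetime.fromtimestamp(last_timestamp_ms / 1000, tz=timezone.utc)
    return {
        "date": date.strftime("%Y-%m-%d"),
        "current_index": latest,
        "day_change": (latest - values[-2]) / latest,
        "week_change": (latest - values[-7]) / latest,
        "month_change": (latest - values[0]) / latest,
    }


summary = summarise(PAYLOAD)
print(json.dumps(summary, indent=2, sort_keys=True))
```

For this payload the date comes out as 2021-03-18 and the current index as 1996.54, so the remaining figures will differ from the output printed above, which appears to come from a different fund's data.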

And for a bit more formatting to make it look human readable, it can go into the following expression

{name, date, current_index, day_change: (.day_change | .*100 | tostring | .[0:4] | (. + \"%\")), week_change: (.week_change | .*100 | tostring | .[0:4] | (. + \"%\")), month_change: (.month_change | .*100 | tostring | .[0:4] | (. + \"%\")) }

giving the data its final format of

{
  "current_index": 1311.94,
  "date": "2021-03-18",
  "day_change": "0%",
  "month_change": "0.04%",
  "name": "....",
  "week_change": "0.00%"
}
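The percentage formatting works by multiplying by 100, turning the number into a string and keeping only its first four characters. The Python sketch below mirrors those steps in `truncated_percent` and, as an alternative that is not part of the original setup, shows a `rounded_percent` helper that formats to a fixed number of decimals instead. Both function names are made up for this example, and Python's `str` may differ from jq's `tostring` in the trailing digits, which does not matter for the first four characters of the values used here.

```python
def truncated_percent(change):
    """Mirror the jq steps: times 100, to string, first four characters, add '%'."""
    return str(change * 100)[:4] + "%"


def rounded_percent(change, decimals=2):
    """Alternative: format with a fixed number of decimals instead of slicing."""
    return f"{change * 100:.{decimals}f}%"


# The three changes from the output shown earlier, plus one negative change.
for change in (0, 9.908989740392787e-05, 0.00044971568821755987, -0.0123):
    print(truncated_percent(change), rounded_percent(change))
```

Slicing the string is compact, but a negative value spends one of its four characters on the minus sign, so -1.23% comes out as "-1.2%". Very small values that get printed in exponent notation would also be cut off in a misleading place, which is one reason fixed-decimal formatting may be the safer choice.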

These formatted values are then piped into other tasks that format them in HTML before being collected in preparation of being sent in a scheduled digest email.

The task graph

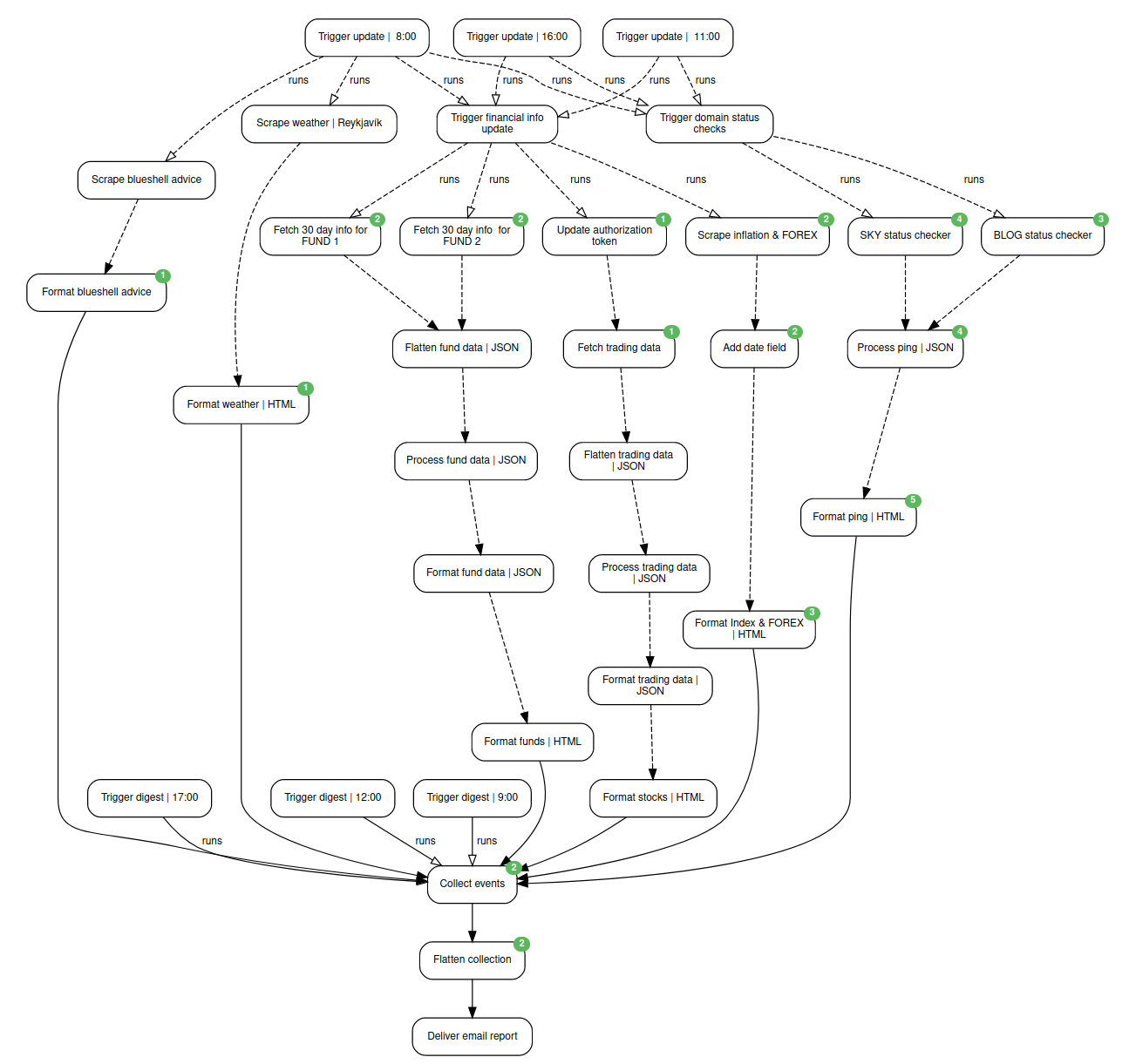

In the end I had created a task graph which grew to contain agents doing various different tasks, such as:

- Infrastructure pings and stats for some of my VPS

- The advisory on the toxicity level of the local mussels (I love going and picking mussels in Hvalfjörður, but would rather not be poisoned by them)

- The morning weather forecast in Reykjavík

- Investment fund information collection

- Stock information collection

- Relevant foreign exchange numbers against the Icelandic króna

- Inflation values, as well as some other official indexes, in Iceland

All of which was then delivered in three separate emails during the day.

The image above shows what the task graph itself ended up looking like.

Worth it?

It took a lot of time and effort to figure out how Huginn functions, and while I really enjoyed learning how to use jq (and will continue to use it on various projects in the future) I am not sure I fully enjoy the way the whole thing fits together in Huginn.

Then there is the issue of there not being a way of exporting agents, apart from either doing a DB migration or manually copying each agent by hand. This in particular makes me not want to invest more time in setting up further agents in case I would need to migrate at some point.

The whole system also runs rather hot, so hot in fact that I started seeing my 1 CPU / 1 GiB node collapse when running my graph three times a day. As the amount of processing being done is minimal, and the data load is not high, I can only assume that there are plenty of optimizations left to be done in the system itself.

In hindsight, I am not so sure I would recommend setting up a Huginn instance to those who want to do this kind of automation as their personal side project. For a commercial entity, and where multiple users need to be supported, I can see the time and effort being worth it.

I think I will take what I learned and simply set up some bash scripts and utilize them in conjunction with jq and cron to achieve the same results on my own personal home tower.

At least I can move Huginn off my to-do list and into done.